About Barnstorm

Barnstorm VFX embodies the diverse skills, freewheeling spirit, and daredevil attitude of the early-day stunt plane pilots. Nominated for a VES award for their outstanding work on the TV series “The Man in the High Castle”, they have been using Blender as an integral part of their pipeline.

The following text is an edited version of the answers from a Reddit AMA held by the heads of the team (Lawson Deming and Cory Jamieson) on February 3, 2017.

Getting into Blender

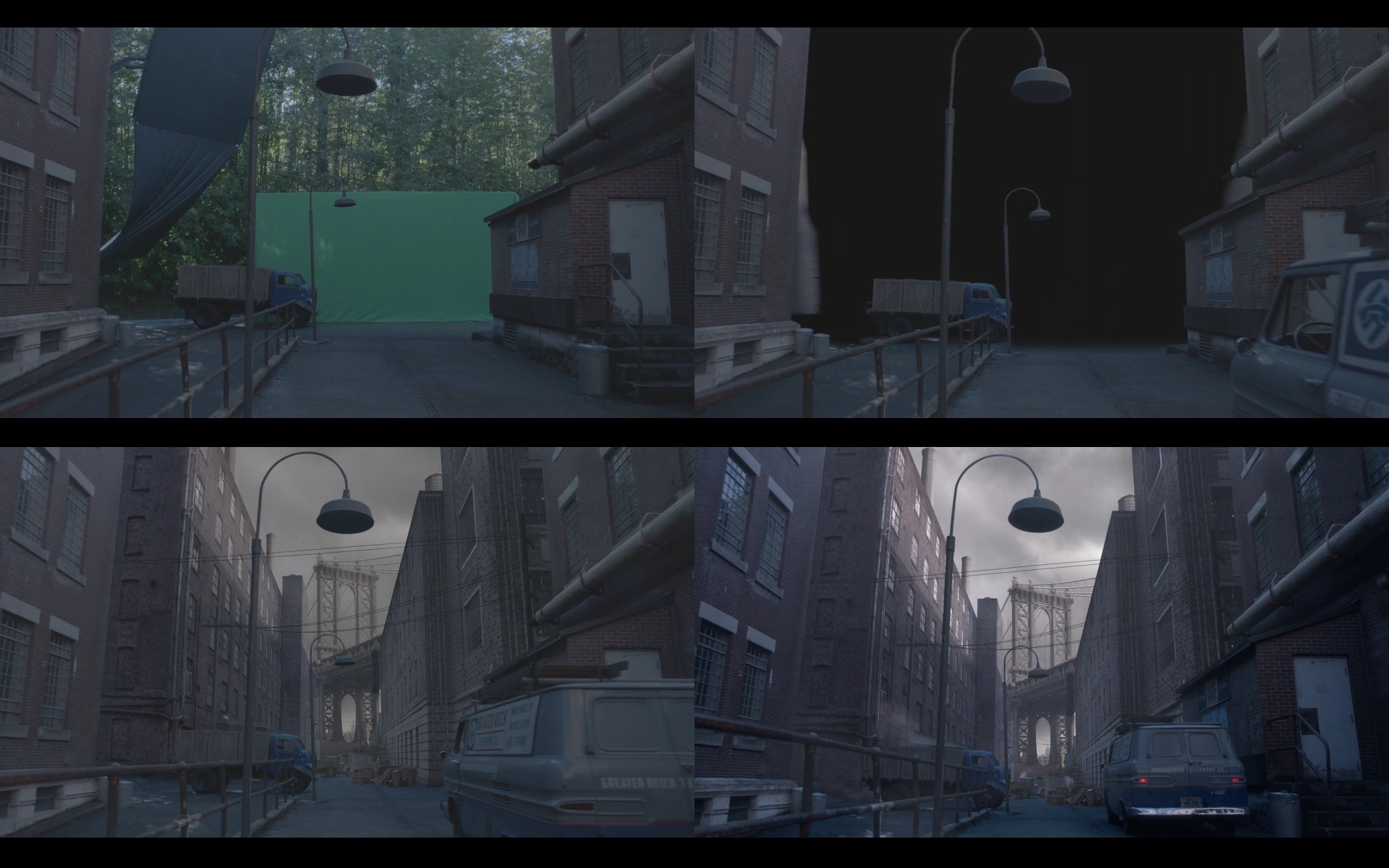

We’ve experimented with a variety of programs over the years, but for 3D work, we settled on using Blender starting about 3 years ago. It’s very unusual for VFX houses (at least in the US) to use Blender (as opposed to, say, Maya), but there are a number of great features that caused us to switch over to it. One of them was the Cycles render engine, which we’ve used to render most of the 3D elements in High Castle and other shows. In order to deal with the huge rendering needs of High Castle, we set up cloud rendering using Amazon’s own AWS servers through Deadline, which allowed us to have as many as 150 machines working at a time to render some of the big sequences.

In addition to Blender, we occasionally use other 3D programs, including Houdini for particle systems, fire, etc. Our texturing and material work is done in Substance Painter, and compositing is done in Nuke and After Effects.

The original decision to use Blender actually didn’t have anything to do with the cost (though it’s certainly helpful now that we have more people using it). We were already using Nuke and NukeX as a company (which are pretty expensive software packages) and had been using Maya for about a year. Before that, Lightwave was what we used.

Assembling a team

The real turning point came when we had to pull together a small team of freelancers to do a sequence. The process went a little bit like this:

- We hire a 3D artist to start modeling for us. He’s an experienced modeler but his background is in a studio environment where there are a lot of departments and a pretty hefty pipeline to help deal with everything. He’s nominally a Maya guy, but the studio he was at had their own custom modeling software which he’s more familiar with, so even though he’s working in Maya, it’s not his first choice.

- The modeling guy only does modeling, so we need to bring in a texture artist. She doesn’t actually use Maya for UV work or texturing. Instead she uses Mari (a Foundry product). She and the Modeler have some issues making the texturing work back and forth between Mari and Maya because they aren’t used to being outside of a studio pipeline that takes care of everything for them.

- Since neither of the above is experienced in layout or rendering, we hire a third guy to do the setup of the scene. He is a Maya guy as well, but once he starts working, he says “Oh, you guys don’t have V-Ray? I can get by in Mental Ray (Maya’s renderer at the time) but I prefer V-Ray.” We spend a ton of time trying to work around Mental Ray’s idiosyncrasies, including weird behavior with the HDR lighting and major gamma issues with the textures.

- We need to do some particle simulation work and smoke and create some water in the same scene… Guess who uses Maya to do these things? No one, apparently. Water and particles are Houdini in this case. Smoke is FumeFX (which at the time only existed as a 3D Studio Max plugin and had no Maya version).

So, pop quiz. What is Maya doing for us in this instance? We’ve got a modeler who is begrudgingly using it but prefers other modeling software, a texture artist who isn’t using it at all, a layout/lighting artist who would rather be using a third-party rendering engine, and the prospect of doing SFX that will require multiple additional third-party software packages totaling thousands of dollars. At the time we were attempting this, the core team of our company was just 5 people, of which I was the only one who regularly did 3D work (in Lightwave).

I consider myself a generalist and had been puttering along in Maya, but I found it very obtuse and difficult to approach from a generalist standpoint. I’d just started dabbling in Blender and found it very approachable and easy to use, with a lot of support and tutorials out there. At the same time our three freelancers were struggling with the above sequence, I managed to build and render another shot from the scene fully in Blender (a program that I was a novice in at the time), utilizing its internal smoke simulation tools and the ocean simulation toolkit (which is actually a port of the one in Houdini) to do SFX on my own, and I got a great looking render out of Cycles.

Blender has its weaknesses, and as a general 3D package, it’s not the best in any one area, but neither is Maya. Any specialty task will always be better in another program. But without a pre-existing Maya pipeline, and with the fact that Maya’s structure encourages the use of many specialists collaborating on a single task (rather than one well-rounded generalist working solo) it didn’t make sense to dump a lot of resources and money into making Maya work for such a small studio.

I ended up falling in love with working in Blender, and as we brought on and trained some other 3D artists, I encouraged them to use it. Eventually we found ourselves a Blender studio. That advantage of being good for a generalist, though, has also been a weakness as we’ve grown as a company, because it’s hard to find people who are really amazing artists in Blender. Our solution up until now has been to work hard on finding good Blender artists and to try and train others who want to learn.

Blender in production

Also, since Blender acts as a hub for VFX work, it’s still possible for specialists to contribute from their respective programs. Initial modeling, for example, can be done in almost any program. It can be difficult, but the more people from other VFX studios I talk to, the more I realize that everybody’s pipeline is pretty messy, and even the studios who are fully behind Maya use a ton of other software and have a lot of custom scripts and techniques to get everything working the way they want it to.

We use Blender for modeling, animation, and rendering. Our partners at Theory Animation have focused a lot on how to make Blender better for animation (they all came from a Maya background as well but fell in love with Blender the same way I did). We’ve used Blender’s fluid system and particle system (though both of these need work) and render everything in Cycles. We still use Houdini for the stuff that it’s good at. We used Massive to create character animations for “The Man in the High Castle”. We also started using Substance Painter and Substance Designer for texture work. Cycles is good at exporting render layers, which we composited mostly in Nuke.

One of the big hurdles that Blender has to overcome is the fact that its licensing rules can make it legally difficult for it to interact with paid software. Most companies want to keep their code closed, so the open-source nature of Blender has made it tricky to, for example, get a Substance Designer plugin. It’s something we’re working on though.

When collaborating with other companies, we usually separate the 3D and compositing aspects of the work to keep the software issues from being a problem. It’s getting easier every day, though, especially now that Blender is starting to support Alembic. For season one, the sequence we worked on was completely separate and turnkey, so we didn’t have any issues sharing assets. For season 2, however, we did need to do a lot of conversion and re-modeling of elements. Also, many of the models we received were textured using UDIMs, which Blender does not currently support. It would be great for Blender to eventually adopt the UDIM workflow for texturing.
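For readers unfamiliar with UDIMs, the convention splits UV space into 1x1 tiles and gives each tile a four-digit number, so that one model can reference many texture files. The sketch below shows the commonly used numbering rule (1001 for the first tile, ten tiles per row). The function name `udim_tile` is made up for illustration, and this is not a description of how any particular vendor's assets were organized.

```python
import math


def udim_tile(u: float, v: float) -> int:
    """Return the UDIM tile number that contains the UV coordinate (u, v).

    Assumes the common convention: tile 1001 covers u in [0, 1), v in [0, 1),
    tile numbers increase by 1 per column and by 10 per row, and only
    10 columns (u in [0, 10)) are allowed.
    """
    if u < 0 or v < 0:
        raise ValueError("UDIM tiles are only defined for non-negative UVs")
    column = math.floor(u)
    row = math.floor(v)
    if column > 9:
        raise ValueError("UDIM layouts use at most 10 columns (u < 10)")
    return 1001 + column + 10 * row


if __name__ == "__main__":
    # A UV point in the second column of the second row.
    print(udim_tile(1.25, 1.75))
```

A converter that flattens UDIM-textured models for a non-UDIM package would typically use a rule like this to decide which texture file each face samples from.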

We do get a lot of raised eyebrows from people when we tell them we use Blender professionally. Hopefully the popularity of the show (and the fact that we’ve been nominated for some VFX awards) will help remove some of the stigma that Blender has developed over the years. It’s a great program.

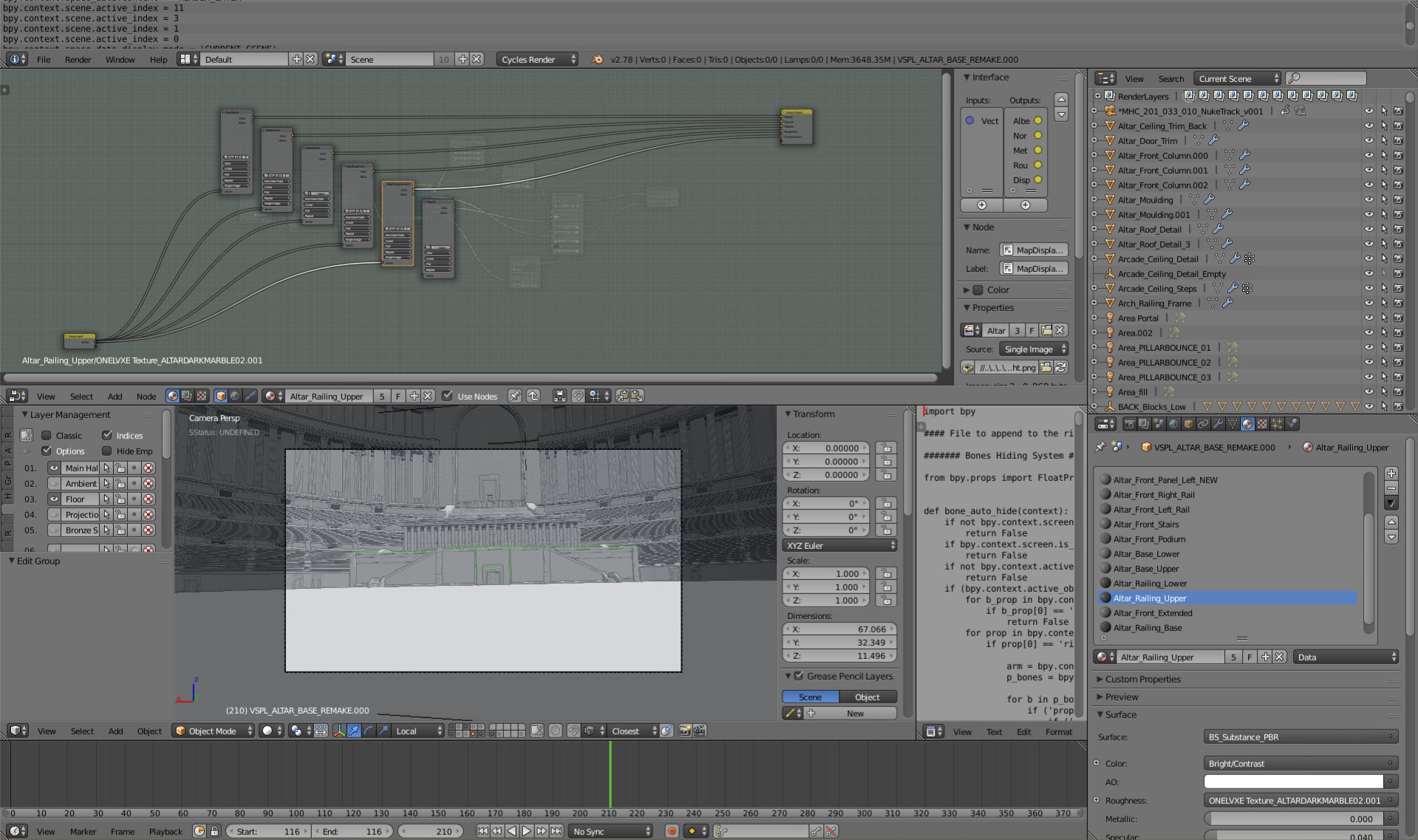

We’ve developed a number of in-house solutions for Blender. We use Blender solely for 3D and NukeX for tracking and compositing, but we hand camera data back and forth between Nuke and Blender using .chan files (that’s technically built into Blender but we’ve developed a system to make it a bit easier). Fitting Blender into a compositing pipeline (Nuke, EXR workflow) is surprisingly easy. Render layers and the ease of setting up Blender have made it pretty fast to pass assets around between artists and vendors. We also have a custom procedure and PBR shader setup for working with materials out of Substance Painter in Blender. A mix of Shotgun, our own asset tracking, and a workflow based on Blender Linking with a handful of add-ons is needed to make sure everything works.
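To give a sense of what a .chan file carries, here is a minimal sketch of writing and reading one in plain Python. It assumes the usual plain-text layout of one line per frame with whitespace-separated columns: frame, translate X/Y/Z, rotate X/Y/Z in degrees, and vertical field of view. The names `CameraSample`, `to_chan`, and `from_chan` are hypothetical, and this is not the in-house system described above. It also ignores rotation order and axis conventions, which a real exchange between Nuke and Blender has to agree on.

```python
from typing import List, NamedTuple


class CameraSample(NamedTuple):
    """One animated camera sample (assumed .chan column order)."""
    frame: int
    tx: float
    ty: float
    tz: float
    rx: float  # rotations in degrees
    ry: float
    rz: float
    vfov: float  # vertical field of view in degrees


def to_chan(samples: List[CameraSample]) -> str:
    """Serialize samples to .chan text, one tab-separated line per frame."""
    lines = []
    for sample in samples:
        values = [f"{value:.6f}" for value in sample[1:]]
        lines.append("\t".join([str(sample.frame)] + values))
    return "\n".join(lines) + "\n"


def from_chan(text: str) -> List[CameraSample]:
    """Parse .chan text back into samples, skipping blank lines."""
    samples = []
    for line in text.splitlines():
        parts = line.split()
        if not parts:
            continue
        if len(parts) != 8:
            raise ValueError(f"expected 8 columns, got {len(parts)}: {line!r}")
        frame = int(float(parts[0]))
        samples.append(CameraSample(frame, *(float(p) for p in parts[1:])))
    return samples


if __name__ == "__main__":
    shot = [
        CameraSample(1, 0.0, 1.5, 10.0, -5.0, 30.0, 0.0, 35.0),
        CameraSample(2, 0.1, 1.5, 9.8, -5.0, 30.5, 0.0, 35.0),
    ]
    print(to_chan(shot), end="")
```

Because the format is just columns of numbers, it is easy to inspect or fix by hand, which is part of why it works well as a neutral handoff between a tracking package and a 3D package.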

Production Design

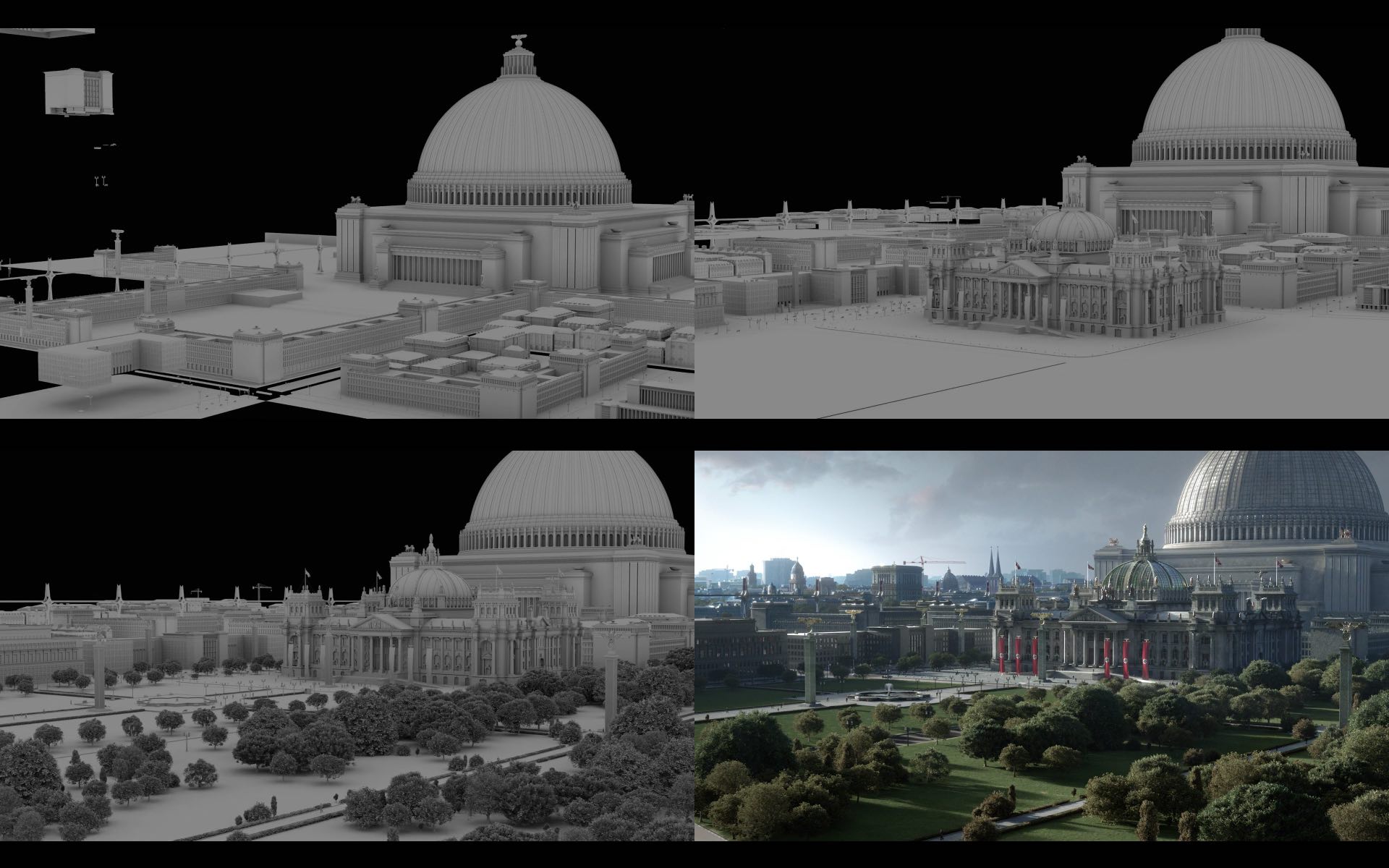

We worked really hard to make it feel correct. You can also thank the Production Designer, Andrew Boughton, who designed the practical sets in the show. He has a lot of architectural knowledge and was very collaborative with us to help make sure our designs matched the feel of the rest of the stuff in the show.

Our visual bible for Germania was a book called “Albert Speer: Architecture 1932-1942”. There were extensive and detailed plans for the transformation of Berlin, including blueprints for buildings like the Volkshalle. We did take some creative liberties with the arrangement and positioning of buildings for the sake of the narrative and to better coordinate with the production designer’s aesthetic of the sets. We looked at old film reels including the famous “Triumph of the Will” for reference on how Nazi rallies were organized. One video game that I remember paying attention to was “Wolfenstein: The New Order” because it presents a world that was taken over by the Nazis, though its presentation of postwar Berlin (including the Volkshalle) was much more futuristic and sci-fi-ish than what we went for. Our goal in MITHC was to create a sense of the world that felt fairly mundane and grounded in reality. The more it felt like something that could really happen, the more effective the message of the show.